Deploy DeepSeek-R1 LLM Locally with Ollama and Open WebUI

2nd January 2025

Deploying Large Language Models (LLMs) locally can be challenging, but with DeepSeek-R1 and Ollama it is now more accessible than ever.

In this post, I'll guide you through deploying a DeepSeek-R1 model on your local CPU/GPU system. We'll deploy the 1.5B and 7B parameter models, and you can choose between them depending on your system's capabilities. We'll also tune the Ollama server to handle multiple concurrent requests and improve its performance. Finally, we'll set up Open WebUI for easier model interaction and management.

Before diving in, make sure you have the following:

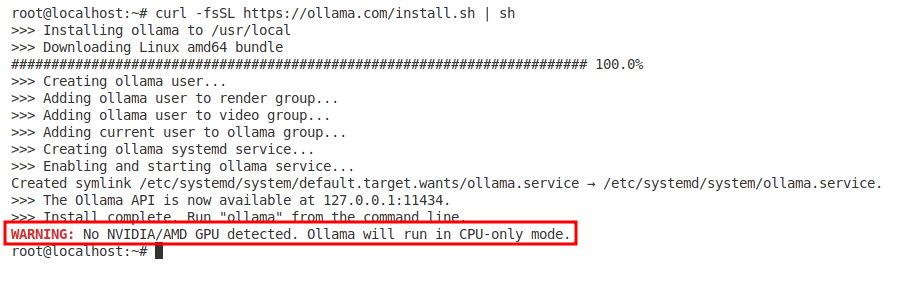

To install Ollama, execute the following command in your terminal to download and run the official installation script:

curl -fsSL https://ollama.com/install.sh | sh

⚠️ Since we are running the model on a CPU-based system, a warning that no GPU was detected may appear during installation. This is normal and can be ignored.

After running the above script, Ollama will be installed on your system. Verify the installation by checking the version:

ollama --version

Now that Ollama is installed, let's pull the DeepSeek-r1 models. You can download both the 1.5B and 7B versions using the Ollama CLI:

ollama pull deepseek-r1:1.5b

ollama pull deepseek-r1:7b

To verify that both models were pulled successfully, run:

ollama list

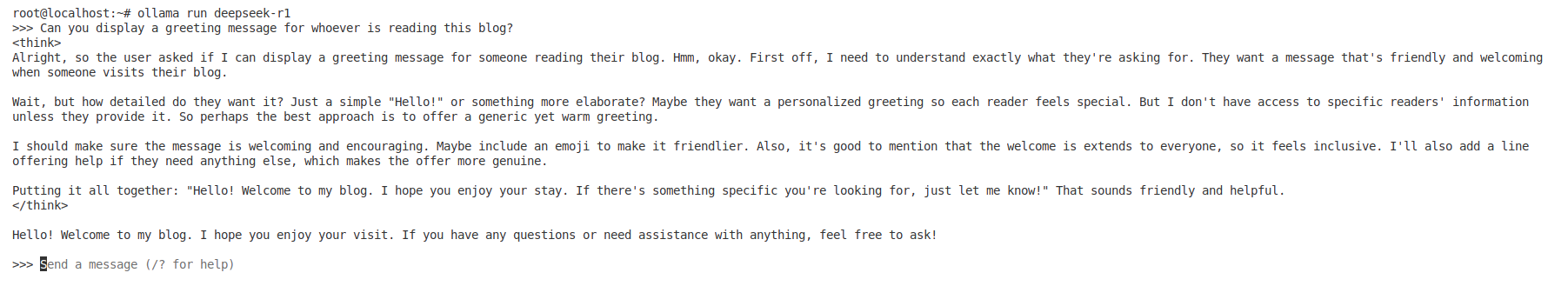
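If you would rather pull only the model your machine can comfortably run, the choice can be scripted. The following is a minimal sketch, not an official recommendation: the helper names `pick_model` and `total_ram_gb` are hypothetical, and the 8 GB cut-off is an assumption based on Ollama's general guidance that 7B models want at least 8 GB of RAM.

```shell
# Pick a DeepSeek-R1 tag from the amount of RAM (in whole GB) passed as $1.
# Assumption: 8 GB or more is enough for the 7B model; below that use 1.5B.
pick_model() {
  if [ "$1" -ge 8 ]; then
    echo "deepseek-r1:7b"
  else
    echo "deepseek-r1:1.5b"
  fi
}

# Total RAM in whole GB, read from /proc/meminfo (Linux only).
# The value is rounded down, so the choice errs toward the smaller model.
total_ram_gb() {
  awk '/^MemTotal:/ { printf "%d", $2 / 1024 / 1024 }' /proc/meminfo
}

# Usage (not run here):
#   ollama pull "$(pick_model "$(total_ram_gb)")"
```

On a GPU system the relevant limit is usually VRAM rather than system RAM, so treat the threshold as a starting point and adjust it for your hardware.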

After pulling the models, you can run them locally with Ollama. You can start each model using the following commands:

ollama run deepseek-r1:1.5b

ollama run deepseek-r1:7b

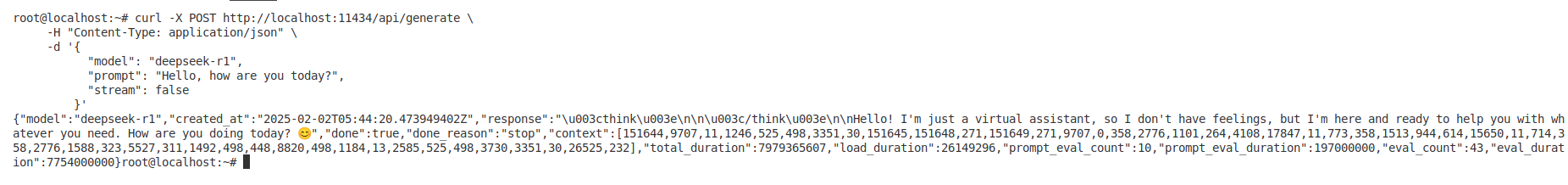

Voila! The model is working. You can now type a query at the interactive `>>>` prompt to check that it responds properly.
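The same check can be made without the interactive prompt, through the REST API that the Ollama server exposes on port 11434 by default. Below is a minimal sketch using the `/api/generate` endpoint. The variable `OLLAMA_URL` and the helper names `json_escape`, `build_payload`, and `ask_model` are hypothetical names chosen for this example, and the escaping only covers backslashes and double quotes.

```shell
# Base URL of the local Ollama server (assumed default address).
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Escape backslashes and double quotes so text is safe inside a JSON string.
# Newlines and control characters are not handled in this sketch.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# Build the JSON body for /api/generate.
# "stream": false asks the server for one complete reply instead of chunks.
build_payload() {
  printf '{"model": "%s", "prompt": "%s", "stream": false}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# Send the prompt ($2) to the model ($1) and print the raw JSON response.
ask_model() {
  curl -s "$OLLAMA_URL/api/generate" -d "$(build_payload "$1" "$2")"
}

# Usage (requires a running Ollama server; not run here):
#   ask_model deepseek-r1:7b "Why is the sky blue?"
```

The generated text is returned in the `response` field of the JSON reply.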

To simplify interaction with your models, you can deploy a web-based interface using Docker. This allows you to manage and query your models through a clean, browser-based UI.

First, make sure that Docker is installed on your system. If it is not, install it by following the official Docker Installation guide.

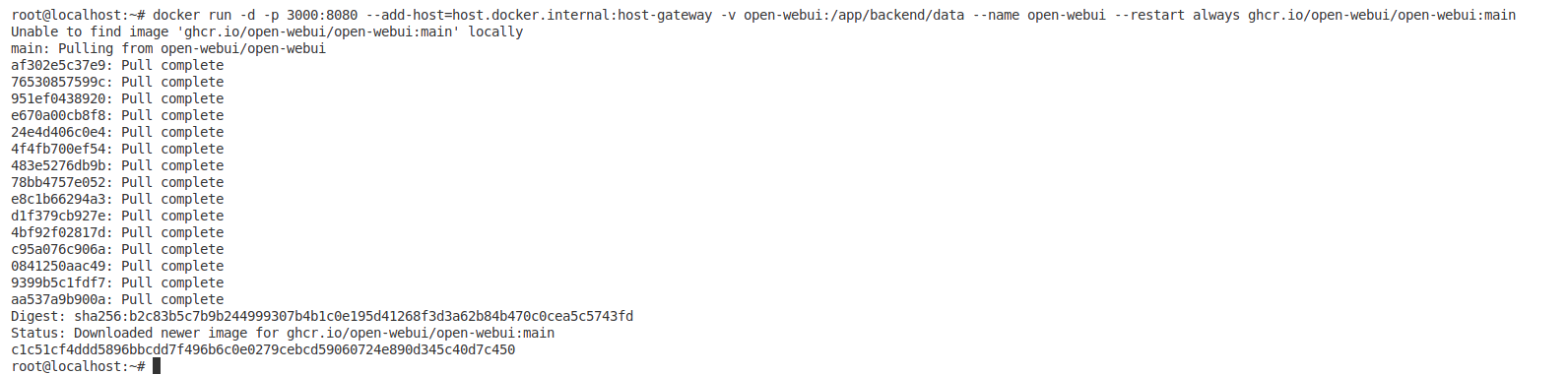

For more efficient model interaction, we'll deploy Open WebUI (formerly known as Ollama Web UI). The following Docker command will map container port 8080 to host port 3000 and ensure the container can communicate with the Ollama server running on your host machine.

Run the following Docker command:

docker run -d -p 3000:8080 --add-host=host.docker.internal:host-gateway -v open-webui:/app/backend/data --name open-webui --restart always ghcr.io/open-webui/open-webui:main

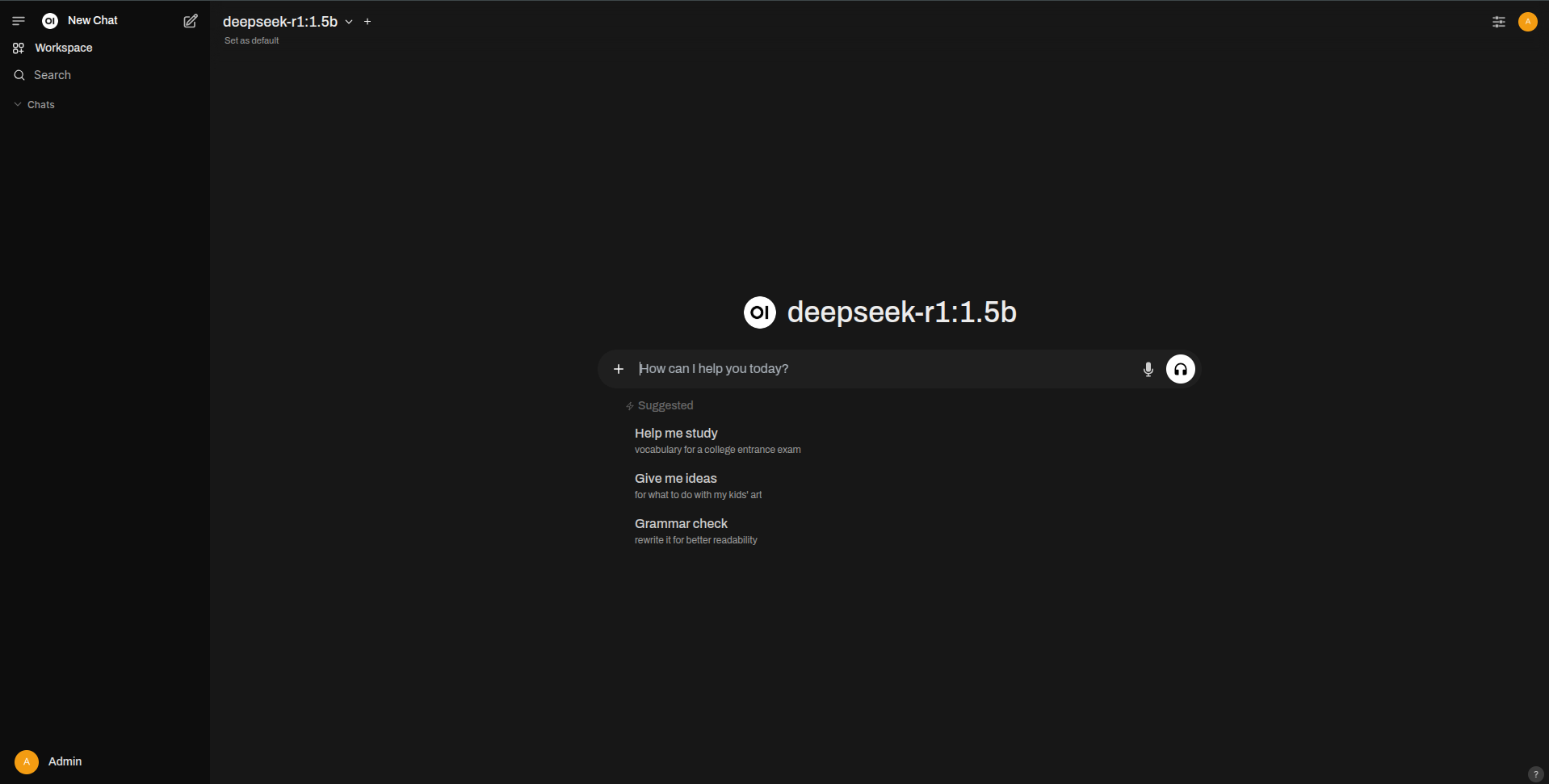

The WebUI is now deployed and can be accessed at:

http://localhost:3000
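If port 3000 is already in use on your host, only the left-hand side of the `-p` mapping needs to change, because Open WebUI still listens on 8080 inside the container. As a small sketch, the hypothetical helper `webui_command` below prints (but does not run) the same Docker command for a host port of your choice.

```shell
# Print the Open WebUI docker command for a given host port (default 3000).
# Only the host side of the port mapping changes; the container port stays 8080.
webui_command() {
  host_port="${1:-3000}"
  echo "docker run -d -p ${host_port}:8080" \
    "--add-host=host.docker.internal:host-gateway" \
    "-v open-webui:/app/backend/data" \
    "--name open-webui --restart always" \
    "ghcr.io/open-webui/open-webui:main"
}

# Usage (not run here): inspect the command, then pipe it to a shell.
#   webui_command 3001
#   webui_command 3001 | sh
```

With a host port of 3001, the interface would then be reachable at http://localhost:3001 instead.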

To squeeze out extra performance and customize how Ollama handles model inference and resource management, you can set several environment variables before starting the Ollama server.

export OLLAMA_FLASH_ATTENTION=1

export OLLAMA_KV_CACHE_TYPE=q8_0

export OLLAMA_KEEP_ALIVE="-1"

export OLLAMA_MAX_LOADED_MODELS=3

export OLLAMA_NUM_PARALLEL=8

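export OLLAMA_MAX_QUEUE=1024

Setting OLLAMA_KV_CACHE_TYPE to q8_0 quantizes the KV cache to 8 bits, which can help reduce memory footprint while maintaining speed. It only takes effect when flash attention is enabled.

Setting OLLAMA_KEEP_ALIVE to -1 disables the timeout, so loaded models stay in memory instead of being unloaded after a period of inactivity.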
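Note that `export` only affects an Ollama server started from the same shell (for example with `ollama serve`). On Linux, the install script typically registers Ollama as a systemd service, and in that case the variables need to be set on the service itself. The sketch below assumes a systemd-based Linux install; `ollama_override` is a hypothetical helper that prints a drop-in file with one `Environment=` line per variable.

```shell
# Print a systemd drop-in that sets each NAME=value argument as an
# Environment= line for the Ollama service.
ollama_override() {
  echo "[Service]"
  for kv in "$@"; do
    printf 'Environment="%s"\n' "$kv"
  done
}

# Usage (needs root and systemd; not run here):
#   sudo mkdir -p /etc/systemd/system/ollama.service.d
#   ollama_override OLLAMA_FLASH_ATTENTION=1 OLLAMA_KV_CACHE_TYPE=q8_0 \
#     OLLAMA_KEEP_ALIVE=-1 OLLAMA_MAX_LOADED_MODELS=3 \
#     OLLAMA_NUM_PARALLEL=8 OLLAMA_MAX_QUEUE=1024 \
#     | sudo tee /etc/systemd/system/ollama.service.d/override.conf
#   sudo systemctl daemon-reload
#   sudo systemctl restart ollama
```

Keep in mind that higher values for parallel requests and loaded models increase memory use, so the numbers shown here may need to be lowered on smaller machines.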

By following this blog post, you can seamlessly set up your local environment to deploy and interact with the DeepSeek-r1 model using Ollama and Open WebUI. You'll be able to harness both the 1.5B and 7B parameter models to their full potential.

Happy deploying and experimenting with your AI models!